Speech Recognition Result Format

In addition to the transcribed text of the submitted audio, AmiVoice API returns various other information, structured in JSON format. This section explains the results returned by AmiVoice API.

Result Structure

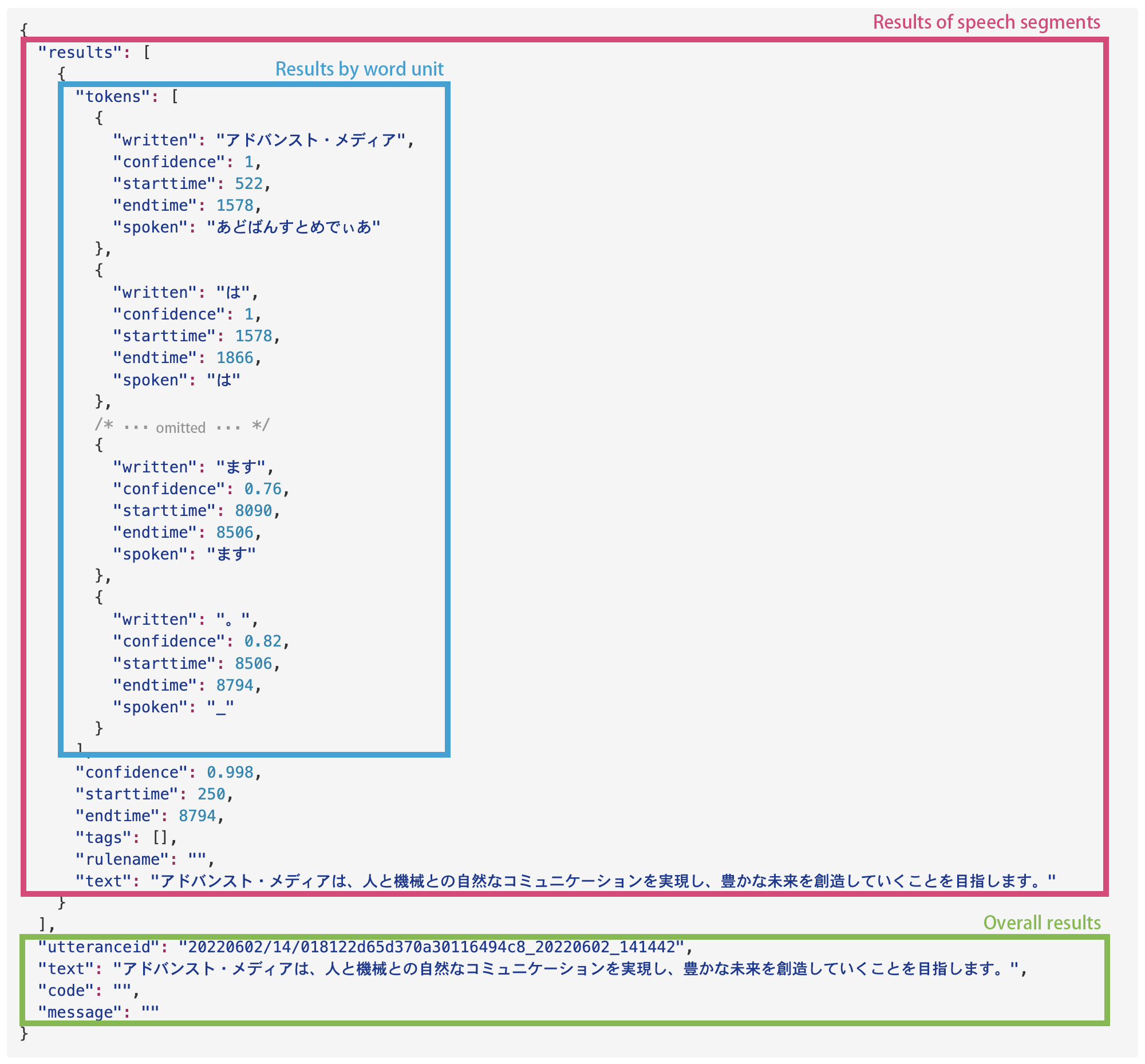

The speech recognition results obtained from AmiVoice API can be broadly divided into three parts: overall results, speech segment results, and word-level results. The examples in this section are taken from the result for the audio file "test.wav" that comes with the sample program of the client library.

We will explain each element in order.

Overall Results

These are the results for the entire audio sent to the API.

Here's an excerpt of the overall results from the test.wav result as an example.

{
  /* ... omitted ... */
  "utteranceid": "20220602/14/018122d65d370a30116494c8_20220602_141442",
  "text": "アドバンスト・メディアは、人と機械との自然なコミュニケーションを実現し、豊かな未来を創造していくことを目指します。",
  "code": "",
  "message": ""
  /* ... omitted ... */
}

The overall results include the following information for each element:

| Field Name | Description | Notes |
|---|---|---|
| utteranceid | Recognition result information ID | The recognition result information ID differs between WebSocket and HTTP interfaces. For WebSocket, it's the ID for the recognition result information for each speech segment. For HTTP, it's the ID for the recognition result information for the entire audio data uploaded in one session, which may include multiple speech segments. |
| text | Overall recognition result text | This is the overall recognition result text that combines all the recognition results of the speech segments. |
| code | Result code | This is a 1-character code representing the result. Please see Response Codes and Messages. |
| message | Error message | This is a string representing the error content. Please see Response Codes and Messages. |

code and message are empty strings when the request succeeds. When it fails, they are set to the reason for the failure; see Response Codes and Messages for details.

On successful recognition:

body.code == "" and body.message == "" and body.text != ""

On failed recognition:

body.code != "" and body.message != "" and body.text == ""
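The success and failure conditions above can be checked directly in client code. The following is a minimal Python sketch, assuming the response body has already been parsed into a dict; the function name `is_successful` is hypothetical, and the error code in the sample data is a placeholder rather than an actual AmiVoice code.

```python
import json


def is_successful(body: dict) -> bool:
    """Apply the success condition: empty code and message, non-empty text."""
    return (
        body.get("code") == ""
        and body.get("message") == ""
        and body.get("text", "") != ""
    )


ok = json.loads('{"code": "", "message": "", "text": "こんにちは"}')
# Placeholder failure body; see Response Codes and Messages for real codes.
ng = json.loads('{"code": "X", "message": "placeholder error", "text": ""}')
print(is_successful(ok))  # True
print(is_successful(ng))  # False
```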

Speech Segment Results

A speech segment is the part of the audio data in which a person is speaking. In AmiVoice API, speech segments are estimated by the Speech Detection process. This section explains the speech recognition results for each speech segment.

The synchronous HTTP interface does not return separate results for each speech segment; all detected segments are combined into a single result.

As an example, here is an excerpt of the speech segment results from the test.wav result.

{
  /* ... omitted ... */
  "results": [
    {
      "tokens": [/* ... word-level results ... */],
      "confidence": 0.998,
      "starttime": 250,
      "endtime": 8794,
      "tags": [],
      "rulename": "",
      "text": "アドバンスト・メディアは、人と機械との自然なコミュニケーションを実現し、豊かな未来を創造していくことを目指します。"
    }
  ]
  /* ... omitted ... */
}

Although results is an array, it always contains exactly one element. The element results[0] holds the result for the speech segment and contains the following fields:

| Field Name | Description | Notes |
|---|---|---|
| results[0].tokens | Word-level results | Word-level results are obtained in array format. Details will be explained in the next chapter. |
| results[0].confidence | Confidence | This is the overall confidence. The value ranges from 0 to 1, with lower confidence indicating a higher possibility of error, and closer to 1 indicating a more likely correct result. |
| results[0].starttime | Start time of the speech segment | This represents the start time of the speech segment detected as the target for speech recognition processing. Please see About Time Information Included in Results. |
| results[0].endtime | End time of the speech segment | This represents the end time of the speech segment detected as the target for speech recognition processing, or the end time of the segment that was actually processed for speech recognition. Please see About Time Information Included in Results. |
| results[0].tags | Tags | Always blank. Currently not used, so please ignore. |
| results[0].rulename | Rule name | Always blank. Currently not used, so please ignore. |
| results[0].text | Speech recognition result text | This is the recognition result text for the audio contained in the speech segment. |
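As a minimal sketch of reading these fields, the following Python function takes the speech segment fields from a parsed result and computes the time between starttime and endtime. The function name `summarize_segment` is hypothetical; the sample values come from the test.wav excerpt above. As explained in the next paragraphs, this duration is not the time used for billing.

```python
from typing import Any


def summarize_segment(body: dict[str, Any]) -> dict[str, Any]:
    """Return the speech segment fields from results[0] of a parsed result."""
    seg = body["results"][0]  # results always contains exactly one element
    return {
        "text": seg["text"],
        "confidence": seg["confidence"],
        "starttime_ms": seg["starttime"],
        "endtime_ms": seg["endtime"],
        # Time between starttime and endtime, in milliseconds.
        # This is not the time used for billing.
        "duration_ms": seg["endtime"] - seg["starttime"],
    }


# Values from the test.wav excerpt (tokens and text abbreviated).
body = {
    "results": [
        {"tokens": [], "confidence": 0.998, "starttime": 250,
         "endtime": 8794, "tags": [], "rulename": "", "text": "..."}
    ]
}
print(summarize_segment(body)["duration_ms"])  # 8544
```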

Difference from the values notified to the client side through the E event packet in the case of WebSocket interface

In the case of the WebSocket interface, the value notified to the client side through the E event packet is the end time of the speech segment. On the other hand, the value of results[0].endtime included in the result represents the end time of the segment that was actually processed for speech recognition within the speech segment. Therefore, the time information of the E event and the value of results[0].endtime may differ slightly, and results[0].endtime may be the same as or slightly before the time of E.

Users do not need to be aware of this difference, but please note that the time calculated from results[0].starttime and results[0].endtime is different from the time subject to billing.

Word-Level Results

Word-level results are obtained in array format in results[0].tokens. A "word" here is the unit handled by the speech recognition engine, which does not necessarily match a grammatical word of the language.

Here's an excerpt of the word-level results from the test.wav result as an example.

{
  /* ... omitted ... */
  "results": [
    {
      "tokens": [
        {
          "written": "アドバンスト・メディア",
          "confidence": 1,
          "starttime": 522,
          "endtime": 1578,
          "spoken": "あどばんすとめでぃあ",
          "label": "speaker0"
        },
        {
          "written": "は",
          "confidence": 1,
          "starttime": 1578,
          "endtime": 1866,
          "spoken": "は"
        },
        /* ... omitted ... */
      ],
      /* ... omitted ... */
    }
  ],
  /* ... omitted ... */
}

The elements of the word-level results include the following information:

| Field Name | Description | Notes |
|---|---|---|
| results[0].tokens[].written | Word-level notation | This is the notation of the recognized word. |
| results[0].tokens[].spoken | Word-level pronunciation | This is the pronunciation of the recognized word. For Japanese engine recognition results, spoken is in hiragana; for English engine recognition results, spoken is not a pronunciation (please ignore it); for Chinese engine recognition results, spoken is pinyin. Not available for E2E engines. |
| results[0].tokens[].starttime | Start time of word-level utterance | This is the estimated time when the utterance of the recognized word started. Please see About Time Information Included in Results. |
| results[0].tokens[].endtime | End time of word-level utterance | This is the estimated time when the utterance of the recognized word ended. Please see About Time Information Included in Results. |
| results[0].tokens[].label | Estimated speaker label for word-level | This information is only obtained when the speaker diarization feature is enabled. It's a label to distinguish speakers, such as speaker0, speaker1, ... For details, please see Speaker Diarization. |
| results[0].tokens[].confidence | Word-level confidence | This is the confidence of the recognized word. The value ranges from 0 to 1, with lower confidence indicating a higher possibility of error, and closer to 1 indicating a more likely correct result. |
| results[0].tokens[].language | Word-level Language Label | This information is only obtained when using multilingual End to End engines (-a2-multi-general, -a2b-multi-general). It represents the language of the word recognized by the engine with labels such as ja (Japanese), en (English), zh (Chinese). |

Only written is always present in the word-level results. Which other fields appear depends on the content of the request and the specified engine; for example, label is present only when speaker diarization is enabled. Also note that for speech recognition engines other than Japanese, punctuation results do not include starttime, endtime, or confidence.

Example of Chinese punctuation result:

{
  "written": "。",
  "spoken": "_"
}
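Because only written is guaranteed, client code should treat every other field as optional. The following is a minimal Python sketch (the function `describe_token` is hypothetical) that formats a token whether or not starttime, endtime, confidence, or label are present.

```python
from typing import Any, Optional


def describe_token(token: dict[str, Any]) -> str:
    """Format one word-level result, tolerating fields that may be absent."""
    written = token["written"]  # the only field that is always present
    start: Optional[int] = token.get("starttime")
    end: Optional[int] = token.get("endtime")
    conf: Optional[float] = token.get("confidence")
    label: Optional[str] = token.get("label")  # only with speaker diarization
    parts = [written]
    if start is not None and end is not None:
        parts.append(f"[{start}-{end} ms]")
    if conf is not None:
        parts.append(f"conf={conf}")
    if label is not None:
        parts.append(label)
    return " ".join(parts)


full = {"written": "アドバンスト・メディア", "confidence": 1, "starttime": 522,
        "endtime": 1578, "spoken": "あどばんすとめでぃあ", "label": "speaker0"}
print(describe_token(full))  # アドバンスト・メディア [522-1578 ms] conf=1 speaker0
print(describe_token({"written": "。", "spoken": "_"}))  # 。
```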

For scenarios where the pronunciation of speech recognition results is needed, such as for speech synthesis or ruby annotation, the word-level reading (results[0].tokens[].spoken) can be used.

For example, here is the result for the utterance "きんいろのおりがみ、こんじきのがらん (Golden origami, golden temple)":

{
  /* ... omitted ... */
  "results": [
    {
      "tokens": [
        {
          "written": "金色",
          "confidence": 0.99,
          "starttime": 1770,
          "endtime": 2666,
          "spoken": "きんいろ"
        },
        /* ... omitted ... */
        {
          "written": "金色",
          "confidence": 1,
          "starttime": 4506,
          "endtime": 5178,
          "spoken": "こんじき"
        },
        /* ... omitted ... */
      ],
      /* ... omitted ... */
    }
  ],
  /* ... omitted ... */
}

Although written is "金色" in both tokens, spoken gives the pronunciation estimated from each utterance in hiragana: "きんいろ" and "こんじき", respectively.
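As one illustration of using spoken for ruby annotation, the following Python sketch builds HTML `<ruby>` markup from word-level results. It assumes a Japanese engine, treats a spoken value of "_" (as returned for punctuation) or a missing spoken as "no reading", and omits readings identical to written. The function name `to_ruby_html` and the "、" token in the sample data are hypothetical.

```python
import html
from typing import Any


def to_ruby_html(tokens: list[dict[str, Any]]) -> str:
    """Build HTML ruby markup from word-level results (Japanese engine)."""
    out = []
    for tok in tokens:
        written = html.escape(tok["written"])
        spoken = tok.get("spoken")
        # No reading for punctuation ("_"), absent spoken, or kana-only words.
        if not spoken or spoken == "_" or spoken == tok["written"]:
            out.append(written)
        else:
            out.append(f"<ruby>{written}<rt>{html.escape(spoken)}</rt></ruby>")
    return "".join(out)


tokens = [
    {"written": "金色", "spoken": "きんいろ"},
    {"written": "、", "spoken": "_"},  # hypothetical punctuation token
    {"written": "金色", "spoken": "こんじき"},
]
print(to_ruby_html(tokens))
# <ruby>金色<rt>きんいろ</rt></ruby>、<ruby>金色<rt>こんじき</rt></ruby>
```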

Details of Results for Each Interface

This section explains how the speech recognition results described in Result Structure are obtained with each interface, along with interface-specific results and differences.

Synchronous HTTP Interface

All speech segments are combined into one result: even if multiple speech segments are detected, a single result is obtained.

{
  "results": [
    {
      "starttime": Start time of the first speech segment,
      "endtime": End time of the last speech segment,
      "tokens": [{Word-level result 1}, ..., {Word-level result N}],
      "confidence": Confidence,
      "tags": [],
      "rulename": "",
      "text": "..."
    }
  ],
  "text": "...",
  "code": Result code,
  "message": Reason for error,
  "utteranceid": "..."
}

- The start time of the first speech segment is obtained in starttime, the end time of the last speech segment in endtime, and all word-level results for the sent audio are obtained in array format in tokens.
- In the synchronous HTTP interface, only one utteranceid is obtained.

Example:

{
  "results": [
    {
      "tokens": [
        {
          "written": "アドバンスト・メディア",
          "confidence": 1,
          "starttime": 522,
          "endtime": 1578,
          "spoken": "あどばんすとめでぃあ"
        },
        {
          "written": "は",
          "confidence": 1,
          "starttime": 1578,
          "endtime": 1866,
          "spoken": "は"
        },
        /* ... omitted ... */
        {
          "written": "ます",
          "confidence": 0.76,
          "starttime": 8090,
          "endtime": 8506,
          "spoken": "ます"
        },
        {
          "written": "。",
          "confidence": 0.82,
          "starttime": 8506,
          "endtime": 8794,
          "spoken": "_"
        }
      ],
      "confidence": 0.998,
      "starttime": 250,
      "endtime": 8794,
      "tags": [],
      "rulename": "",
      "text": "アドバンスト・メディアは、人と機械との自然なコミュニケーションを実現し、豊かな未来を創造していくことを目指します。"
    }
  ],
  "utteranceid": "20220602/14/018122d65d370a30116494c8_20220602_141442",
  "text": "アドバンスト・メディアは、人と機械との自然なコミュニケーションを実現し、豊かな未来を創造していくことを目指します。",
  "code": "",
  "message": ""
}

Asynchronous HTTP Interface

Results are obtained for each speech segment: if multiple speech segments are detected, each one gets its own entry in segments.

{
  "segments": [
    {
      "results": [
        {
          "starttime": Start time of speech segment,
          "endtime": End time of speech segment,
          "tokens": [{Word-level result 1}, ...],
          "confidence": Confidence,
          "tags": [],
          "rulename": "",
          "text": "..."
        }
      ],
      "text": "..."
    },
    /* ... */
    {
      "results": [
        {
          "starttime": Start time of speech segment,
          "endtime": End time of speech segment,
          "tokens": [..., {Word-level result N}],
          "confidence": Confidence,
          "tags": [],
          "rulename": "",
          "text": "..."
        }
      ],
      "text": "..."
    }
  ],
  "text": "...",
  "code": "...",
  "message": "...",
  "utteranceid": "...",
  /* ... Items specific to asynchronous HTTP interface below ... */
  "audio_md5": "...",
  "audio_size": 0,
  "service_id": "...",
  "session_id": "...",
  "status": "..."
}

- Unlike the synchronous HTTP interface, results for each speech segment (results) are obtained in array format in segments.

To obtain results at the top level like in the synchronous HTTP interface, specify compatibleWithSync=True in the d parameter.
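Client code that handles both HTTP interfaces can normalize their output into a list of speech segment results. A minimal Python sketch, assuming that a result with a top-level segments array comes from the asynchronous HTTP interface, and that otherwise the top-level results holds a single combined result (synchronous HTTP, or asynchronous HTTP with compatibleWithSync=True). The function name `iter_segments` and the sample data are hypothetical.

```python
from typing import Any


def iter_segments(body: dict[str, Any]) -> list[dict[str, Any]]:
    """Return speech-segment-level result objects from a parsed result."""
    if "segments" in body:
        # Asynchronous HTTP (default): one results[0] per detected speech segment.
        return [seg["results"][0] for seg in body["segments"] if seg.get("results")]
    # Synchronous HTTP, or asynchronous HTTP with compatibleWithSync=True:
    # a single combined result at the top level.
    return (body.get("results") or [])[:1]


async_body = {"segments": [
    {"results": [{"starttime": 250, "endtime": 1200, "text": "A"}], "text": "A"},
    {"results": [{"starttime": 1500, "endtime": 3000, "text": "B"}], "text": "B"},
]}
sync_body = {"results": [{"starttime": 250, "endtime": 3000, "text": "AB"}], "text": "AB"}
print([(s["starttime"], s["endtime"]) for s in iter_segments(async_body)])
# [(250, 1200), (1500, 3000)]
print(len(iter_segments(sync_body)))  # 1
```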

Items that are unique to the asynchronous HTTP interface, such as job status, are as follows:

| Field Name | Description | Notes |
|---|---|---|
| session_id | Session ID | ID of the job sent to the asynchronous HTTP interface. |
| status | Asynchronous processing status | Status of the job sent to the asynchronous HTTP interface. |
| audio_size | Size of the requested audio | Size of the audio accepted by the asynchronous HTTP interface. |
| audio_md5 | MD5 checksum of the requested audio | MD5 checksum of the audio accepted by the asynchronous HTTP interface. |
| content_id | Content ID | ID that users can use to link data later. |
| service_id | Service ID | Service ID issued for each user. |
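audio_md5 can be used to confirm that the job received the audio you intended to send. A minimal Python sketch, assuming audio_md5 is a hexadecimal MD5 digest computed over the audio bytes exactly as uploaded (an assumption not confirmed by this page); the function name `md5_matches` is hypothetical.

```python
import hashlib


def md5_matches(local_audio: bytes, body: dict) -> bool:
    """Compare the MD5 of the local audio with audio_md5 (case-insensitive hex)."""
    local = hashlib.md5(local_audio).hexdigest()  # lowercase hex digest
    return local == str(body.get("audio_md5", "")).lower()


audio = b"\x00\x01" * 100  # stand-in for the bytes of the uploaded file
body = {"audio_md5": hashlib.md5(audio).hexdigest()}
print(md5_matches(audio, body))  # True
```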

WebSocket Interface

Speech Segment Results

Results are obtained for each speech segment: if there are multiple speech segments, a separate result event (A) is obtained for each one.

{
  "results": [
    {
      "starttime": Start time of speech segment,
      "endtime": End time of speech segment,
      "tokens": [{Word-level result 1}, ..., {Word-level result N}],
      "confidence": Confidence,
      "tags": [],
      "rulename": "",
      "text": "Transcription results"
    }
  ],
  "text": "...",
  "code": Result code,
  "message": Reason for error,
  "utteranceid": "..."
}

- A different ID is given to utteranceid for each speech segment.
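With the WebSocket interface, a client typically collects the A events and joins their text. The following Python sketch assumes each result event arrives as a text message consisting of "A", a space, and the JSON body shown above; that framing, the function name `collect_final_results`, and the sample messages (other events shown schematically as "s" and "e") are assumptions for illustration.

```python
import json
from typing import Any


def collect_final_results(messages: list[str]) -> list[dict[str, Any]]:
    """Pick out result events (A) from raw WebSocket text messages."""
    results = []
    for msg in messages:
        if msg == "A" or msg.startswith("A "):
            payload = msg[2:].strip()
            if payload:  # skip an A event that carries no JSON body
                results.append(json.loads(payload))
    return results


messages = [
    "s",  # other event packets, shown schematically
    'A {"results": [{"text": "一つ目"}], "text": "一つ目", "utteranceid": "u1"}',
    'A {"results": [{"text": "二つ目"}], "text": "二つ目", "utteranceid": "u2"}',
    "e",
]
finals = collect_final_results(messages)
print([r["utteranceid"] for r in finals])  # ['u1', 'u2']
print("".join(r["text"] for r in finals))  # 一つ目二つ目
```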

Interim Results

These are interim results obtained while speech recognition is in progress. Interim results are hypotheses, so their content may change in the final speech segment results.

{
  "results": [
    {
      "tokens": [{Word-level result 1}, ..., {Word-level result N}],
      "text": "Transcription results"
    }
  ],
  "text": "..."
}
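A common way to display interim results is to show the latest hypothesis and replace it once the speech segment result arrives. The following Python sketch assumes each interim result carries the full hypothesis for the current segment (not a difference from the previous one). How interim and final events are distinguished on the wire is outside this sketch, so the class `LiveTranscript` and its method names are hypothetical.

```python
from typing import Any


class LiveTranscript:
    """Committed text from final results plus a replaceable interim hypothesis."""

    def __init__(self) -> None:
        self.committed: list[str] = []
        self.pending = ""  # latest interim hypothesis; may still change

    def on_interim(self, body: dict[str, Any]) -> None:
        # Each interim result replaces the previous hypothesis instead of appending.
        self.pending = body.get("text", "")

    def on_final(self, body: dict[str, Any]) -> None:
        # The speech segment result supersedes the interim hypothesis.
        self.committed.append(body.get("text", ""))
        self.pending = ""

    def display(self) -> str:
        return "".join(self.committed) + self.pending


t = LiveTranscript()
t.on_interim({"text": "アドバンスト"})
t.on_interim({"text": "アドバンスト・メディアは"})
print(t.display())  # アドバンスト・メディアは
t.on_final({"text": "アドバンスト・メディアは、"})
print(t.display())  # アドバンスト・メディアは、
```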

About Result Text

Character Encoding

Results are encoded in UTF-8.

Unicode Escape

Multibyte characters, such as Japanese text, in the results of the synchronous HTTP interface and the WebSocket interface are Unicode-escaped.

Result without Unicode escape:

"text": "アドバンスト・メディアは、人と機械との自然なコミュニケーションを実現し、豊かな未来を創造していくことを目指します。"

Result with Unicode escape:

"text":"\u30a2\u30c9\u30d0\u30f3\u30b9\u30c8\u30fb\u30e1\u30c7\u30a3\u30a2\u306f\u3001\u4eba\u3068\u6a5f\u68b0\u3068\u306e\u81ea\u7136\u306a\u30b3\u30df\u30e5\u30cb\u30b1\u30fc\u30b7\u30e7\u30f3\u3092\u5b9f\u73fe\u3057\u3001\u8c4a\u304b\u306a\u672a\u6765\u3092\u5275\u9020\u3057\u3066\u3044\u304f\u3053\u3068\u3092\u76ee\u6307\u3057\u307e\u3059\u3002"
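Standard JSON parsers decode \uXXXX escapes automatically, so the escaped form needs no special handling. A short Python illustration using the standard json module, with the first six escaped characters of the example above:

```python
import json

escaped = r'{"text":"\u30a2\u30c9\u30d0\u30f3\u30b9\u30c8"}'  # raw string keeps the escapes
body = json.loads(escaped)  # json.loads decodes \uXXXX escapes
print(body["text"])  # アドバンスト
# ensure_ascii=False writes the characters back out without escaping them.
print(json.dumps(body, ensure_ascii=False))  # {"text": "アドバンスト"}
```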

Automatic Removal of Unnecessary Words

Unnecessary words such as "あのー" and "えーっと" are automatically removed from the text. For details, please see Automatic removal of unnecessary words.

About Time Information Included in Results

Values representing time, such as results[0].starttime, results[0].endtime, results[0].tokens[].starttime, and results[0].tokens[].endtime, are in milliseconds, measured from the beginning of the audio data (time 0). In the WebSocket interface, the span from s to e is one session, and times are relative to the start of that session; the reference time is reset each time a new session begins.
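Because all times are millisecond offsets, converting them for display (for example, for subtitles) is simple arithmetic. A minimal Python sketch; for the WebSocket interface it assumes the client records where each session starts on its own overall timeline (`session_offset_ms`), which is hypothetical bookkeeping and not a field returned by the API.

```python
def format_ms(ms: int) -> str:
    """Format a millisecond offset as HH:MM:SS.mmm."""
    hours, rem = divmod(ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    seconds, millis = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{seconds:02d}.{millis:03d}"


def to_stream_time(ms_in_session: int, session_offset_ms: int) -> int:
    """WebSocket times restart at 0 for each session (s to e); add the
    client-tracked start of that session to get an overall position."""
    return session_offset_ms + ms_in_session


print(format_ms(522))   # 00:00:00.522
print(format_ms(8794))  # 00:00:08.794
print(format_ms(to_stream_time(1578, 60_000)))  # 00:01:01.578
```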

About the Audio Time Subject to Billing

Billing for the speech recognition API is based on speech segments. Accurate speech segment information can be obtained from the S and E events in the WebSocket interface. Please also see Difference from the values notified to the client side through the E event packet in the case of WebSocket interface.